The rise of artificial intelligence (AI) in the banking industry has raised complex questions about trust, security, and ethical deployment in a heavily regulated sector. In an industry where customer trust is critical, bank leaders must navigate the technology’s challenges by balancing innovation and responsibility, according to Theodora Lau in Fintech Futures.

Leveraging AI in the Financial Industry

As it has in numerous other industries, AI has transformed banking by increasing efficiency and reducing costs. Chatbots now handle a wide range of inquiries in the financial services industry, freeing up human agents to focus on more complex tasks. AI tools have also been deployed for tasks that include:

- Improving the credit scoring process

- Flagging fraudulent transactions

- Personalizing financial products to meet customers’ specific needs

AI’s Transparency Problem for Banks

The same tools that enhance customer service and streamline operations, however, also raise red flags. A key concern is the lack of transparency in how these AI models, particularly large language models (LLMs), operate.

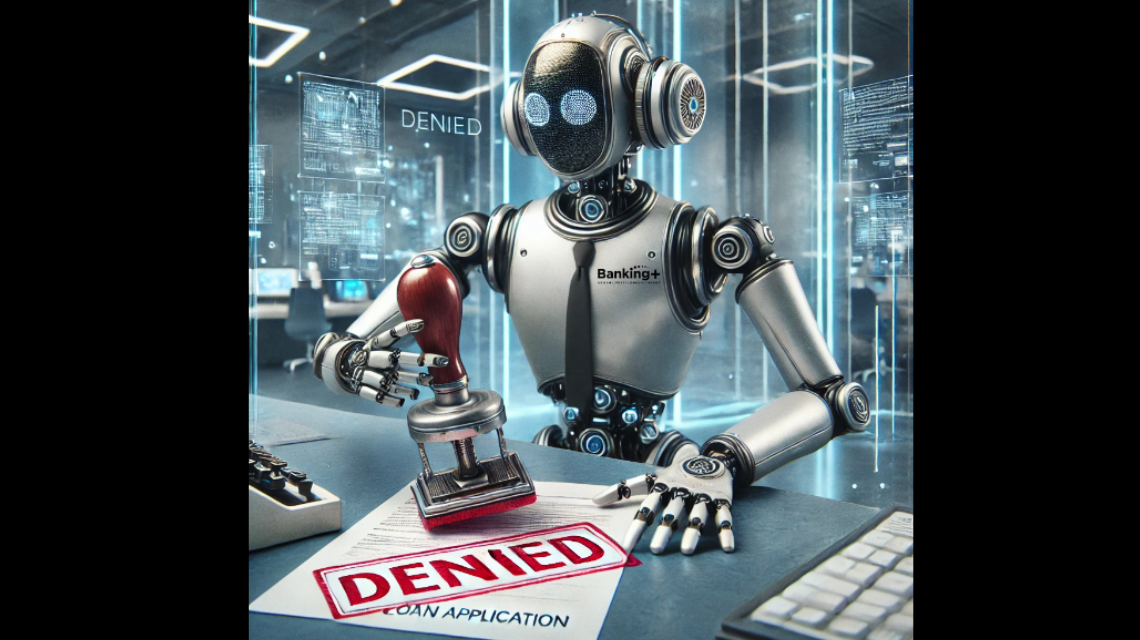

For example, while AI can make credit decisions faster, it’s often unclear how these decisions are reached. In a sector where compliance and accountability are nonnegotiable, banks must be able to explain the rationale behind AI-driven decisions, especially when they affect someone’s financial livelihood.

AI Bias and Fairness: A Growing Concern for Banks

One of the biggest challenges for banks using AI is the risk of perpetuating bias. If an AI system is trained on data that reflects historical discrimination, it could unintentionally reinforce existing inequalities.

For instance, certain demographics could face unfair hurdles in accessing credit or loans. Bias in AI decision-making is not just an ethical issue—it can lead to regulatory penalties and erode customer trust.

In response, financial institutions are under pressure to ensure that their AI systems are both transparent and fair. This means carefully selecting training data and constantly monitoring AI outputs to ensure they align with ethical guidelines and regulatory requirements. The reputational and legal risks of failing to address these issues are significant, particularly in a banking sector that is already under scrutiny for systemic biases in lending practices.
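As one illustration of what monitoring AI outputs could look like, the sketch below compares approval rates across groups and flags any group whose rate falls well below that of the best-served group. It is a minimal example under stated assumptions, not a compliance method. The function names, the `(group, approved)` record shape, and the 0.8 threshold (a rule of thumb borrowed from the "four-fifths" guideline used in U.S. employment selection) are illustrative choices, not something taken from Lau's analysis or from any banking regulation.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True or False. This record shape is hypothetical.
    """
    totals = defaultdict(int)
    approved_counts = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approved_counts[group] += 1
    return {g: approved_counts[g] / totals[g] for g in totals}


def impact_ratios(decisions):
    """Divide each group's approval rate by the highest group's rate."""
    rates = approval_rates(decisions)
    highest = max(rates.values(), default=0.0)
    if highest == 0:
        # Nobody was approved, so there is no disparity to measure.
        return {g: 1.0 for g in rates}
    return {g: rate / highest for g, rate in rates.items()}


def flag_groups(decisions, threshold=0.8):
    """List groups whose ratio falls below the (assumed) threshold."""
    ratios = impact_ratios(decisions)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)
```

A flagged group in a check like this is a prompt for human review of the model and its training data, not proof of discrimination. Real fairness monitoring would likely use several metrics and legal guidance specific to lending.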

The Security of AI in Banking

Security is another critical area where AI plays a double-edged role in banking.

On one hand, AI is invaluable for detecting fraudulent activity in real time. Machine learning algorithms can analyze transaction patterns and flag anomalies far faster than human analysts ever could. This allows banks to prevent fraud before it affects customers.
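To make the idea of flagging anomalies concrete, here is a deliberately simple sketch that compares a new transaction amount against a customer's past amounts using a z-score. It is a statistical stand-in for illustration, not the machine learning models banks actually run. The function name, the use of amounts alone, and the threshold of 3 standard deviations are all assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history, amount, z_threshold=3.0):
    """Return True if `amount` is far from the customer's usual spending.

    `history` is a list of past transaction amounts (hypothetical input).
    """
    if len(history) < 2:
        # Too little history to estimate what is normal.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Every past amount was identical, so any change stands out.
        return amount != mu
    z_score = abs(amount - mu) / sigma
    return z_score > z_threshold


past_amounts = [20, 25, 22, 30, 18, 27]
print(is_anomalous(past_amounts, 26))   # typical purchase
print(is_anomalous(past_amounts, 500))  # far outside the usual range
```

Production fraud systems would likely weigh many more signals, such as merchant, location, and timing, but the principle of scoring each transaction against an expected pattern is the same.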

The Threat of Voice Cloning with AI

On the other hand, AI itself can be exploited by malicious actors. One alarming development is the rise of voice-cloning AI, which can mimic a person’s voice after hearing just a short sample. This opens the door for sophisticated scams, such as using cloned voices to trick call center agents or customers into sharing sensitive financial information. In a sector that prioritizes confidentiality and trust, such vulnerabilities are a growing concern.

The Rise of Sophisticated Phishing Attacks with AI

Moreover, AI-generated phishing emails and fake customer service interactions are becoming more convincing, further complicating the security landscape. Banks must ensure that their AI systems are designed with robust security measures from the ground up, rather than treating security as an afterthought. For financial institutions, a breach of trust can have devastating long-term effects, driving away customers and inviting regulatory scrutiny.

Banking AI and Regulatory Risk

The financial sector is one of the most heavily regulated industries, and for good reason. Ensuring that AI systems comply with regulatory standards is a key challenge for banks. Missteps in AI deployment can lead to hefty fines, especially if AI-driven decisions are found to be discriminatory or in violation of privacy laws.

The Rise of AI Regulation for Banks

Regulators are increasingly focused on how banks use AI, particularly in areas like lending, risk assessment, and customer data management. There is growing scrutiny of how AI models are trained, whether they introduce bias, and how transparent they are to both customers and regulators. Financial institutions that fail to address these issues may find themselves liable for regulatory penalties.

Ethical AI for Banks

At the same time, banks must ensure that AI systems are deployed ethically. This includes not only safeguarding against bias and security vulnerabilities but also ensuring that AI doesn’t contribute to the economic divide. For example, as voice-enabled payments and other AI-driven innovations are introduced, banks must ensure they are accessible to all customers, regardless of language or tech proficiency.

A Responsible Approach to AI in Banking

While AI offers significant opportunities for innovation, banks cannot afford to rush into adoption without careful consideration. Financial institutions must implement guardrails—such as regular audits, transparency requirements, and ethical AI frameworks—to ensure that these tools enhance rather than erode trust.

Ultimately, the future of AI in banking hinges on the industry’s ability to balance innovation with responsibility. As AI continues to evolve, banks must remain focused on building systems that are secure, fair, and transparent. In doing so, they can not only harness the benefits of AI but also safeguard the trust that is the cornerstone of the banking relationship.

Author Theodora Lau’s full analysis of the banking industry and its use of AI is available at Fintech Futures.